Automating IT Security

IT security’s battle with the hacking community has always been a game of cat and mouse, but it’s becoming increasingly automated.

A study from Forrester recently warned that IT security professionals are becoming increasingly concerned about the rise in cyber crime powered by artificial intelligence (AI).

The study, commissioned by Darktrace, reported that close to 80% of cyber security decision-makers expect offensive AI to increase the scale and speed of attacks.

Beyond speed, 66% also expect offensive AI to conduct attacks that no human could conceive of. The study warned that these attacks will be stealthy and unpredictable, allowing them to evade traditional security approaches that rely on rules and signatures derived only from historical attacks.

According to Forrester, human operators limit the speed with which organisations can detect, interpret and respond to threats. As attackers modify their tactics and beat legacy security tooling, they will move deeper and more quickly into compromised networks, the analyst firm warned. This lack of speed has serious implications.

Advances in AI

Cyber security decision-makers are most concerned about system or business interruption, intellectual property or data theft, and reputational damage. But advances in AI can help defenders too.

PA Consulting’s Lee Howells, an AI and automation expert, and Yannis Kalfoglou, an AI and blockchain expert, believe that the use and capabilities of AI to attack organisations are growing and becoming more sophisticated.

In a recent Computer Weekly article, Howells and Kalfoglou suggested that cyber criminals would inevitably take advantage of AI, and such a move would increase threats to digital security and the volume and sophistication of cyber attacks.

“AI provides multiple opportunities for cyber attacks – from the mundane, such as increasing the speed and volume of attacks, to the sophisticated, such as making attribution and detection harder, impersonating trusted users and deep fakes,” they wrote.

One example of a simple but elegant AI-based attack is Seymour and Tully’s Social Network Automated Phishing with Reconnaissance (SNAP_R). This proof of concept, created by security researchers, automates the creation of fake tweets containing malicious links.

Tailored attacks

According to Howells and Kalfoglou, AI’s ability to analyse large amounts of data at pace means many of these attacks are likely to be uniquely tailored to a specific organisation.

The pair warned that professional criminal networks using AI and machine learning will be able to mount highly sophisticated cyber attacks with a speed and thoroughness that could overwhelm an organisation’s IT security capabilities.

AI-enabled security automation has the potential to counter AI-powered malicious activity. For instance, Howells and Kalfoglou said an organisation could use behaviour-based analytics, harnessing the pattern-matching capabilities of machine learning.

“Assuming the appropriate data access consents are in place, the abundance of user behaviour data available from streaming, devices and traditional IT infrastructure gives organisations a sophisticated picture of people’s behaviour,” they wrote.

For Howells and Kalfoglou, user behavioural data can be analysed to help organisations determine what device is being used at a particular time (for example, an iPad at 10pm), what the user is typically doing at that time (such as processing emails), who the user is interacting with (for example, no video calls after 10pm, in line with security policy) and what data is being accessed (for example, blocking shared drive access after 10pm).

They add that a well-trained machine learning system can build, maintain and update this behavioural profile in real time. Any deviation from the normal pattern is analysed and can trigger an alert that leads to cyber defence mechanisms being deployed.
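The behavioural baselining the pair describe can be sketched in a few lines. This is a minimal, hypothetical illustration rather than their method: instead of a trained machine learning model, it keeps simple per-user frequency counts of (device, hour, activity) combinations and treats an event as a deviation when its combination makes up less than an assumed 5% of that user’s history. The `Event` and `BehaviourBaseline` names and the threshold are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One observed user action (hypothetical schema for illustration)."""
    user: str
    device: str     # e.g. "ipad"
    hour: int       # hour of day, 0-23
    activity: str   # e.g. "email", "video_call", "shared_drive"


class BehaviourBaseline:
    """Per-user frequency profile standing in for a trained behaviour model (assumption)."""

    def __init__(self, min_share: float = 0.05) -> None:
        self.min_share = min_share  # assumed cut-off: rarer combinations count as deviations
        self.counts: dict[str, Counter] = {}
        self.totals: Counter = Counter()

    def update(self, event: Event) -> None:
        # Record the event so the profile stays current as new data streams in.
        key = (event.device, event.hour, event.activity)
        self.counts.setdefault(event.user, Counter())[key] += 1
        self.totals[event.user] += 1

    def is_deviation(self, event: Event) -> bool:
        total = self.totals[event.user]
        if total == 0:
            return True  # no history for this user yet, so nothing counts as "normal"
        seen = self.counts[event.user][(event.device, event.hour, event.activity)]
        return seen / total < self.min_share


if __name__ == "__main__":
    baseline = BehaviourBaseline()
    for _ in range(20):
        baseline.update(Event("alice", "ipad", 22, "email"))  # emails on an iPad at 10pm
    print(baseline.is_deviation(Event("alice", "ipad", 22, "email")))         # False
    print(baseline.is_deviation(Event("alice", "ipad", 22, "shared_drive")))  # True
```

A production system would weigh many more signals and learn thresholds from data; the fixed share here simply stands in for the “normal pattern” described above.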

Cost of a data breach

IBM’s 2019 Cost of a data breach report examined the relationship between the cost of a data breach and the state of security automation, comparing companies that deploy automated security methods and technologies with those that do not.

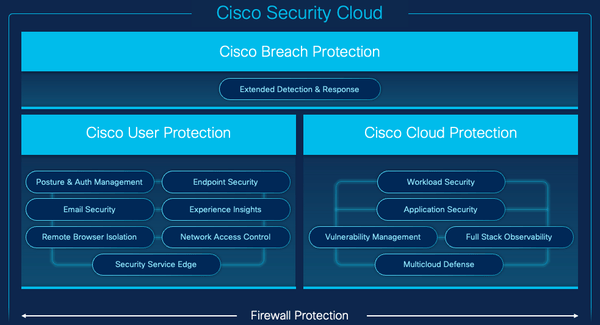

These security technologies aim to augment or replace human intervention in identifying and containing cyber exploits or breaches, and rely on artificial intelligence, machine learning, analytics and incident response orchestration.

Systems that automate the detection of, and response to, malicious activity reduce the need for human-driven investigation. IBM’s research found that just over half (52%) of the companies studied had security automation partially or fully deployed. The average total cost of a data breach was 95% higher in organisations without security automation than in those where it was fully deployed.

IBM reported that the average total cost of a data breach was $2.65m for organisations that had fully deployed security automation, compared with $5.16m for organisations that had not deployed it at all: a difference of $2.51m.
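The two IBM figures and the 95% gap reconcile if the percentage is taken relative to the cost for organisations with fully deployed automation. A quick check of that arithmetic, using only the numbers quoted above:

```python
# Average total cost of a data breach, in $m, as quoted from IBM's 2019 report
fully_automated = 2.65
no_automation = 5.16

difference = no_automation - fully_automated
percent_higher = difference / fully_automated * 100  # relative to the fully automated figure

print(f"Difference: ${difference:.2f}m")  # Difference: $2.51m
print(f"{percent_higher:.0f}% higher")    # 95% higher
```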

Ivana Bartoletti, a cyber risk technical director at Deloitte and a founder of Women Leading in AI, says AI can be used to train a system to identify even the subtlest behaviours of ransomware and malware before they enter the system, and then to isolate them. She says AI can also be used to automate the detection of phishing and data theft, which is particularly valuable because these threats demand a real-time response.

Linking AI and operations to tackle cyber threats

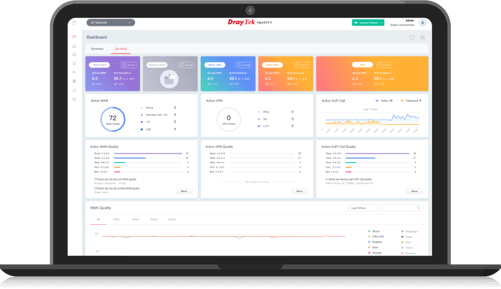

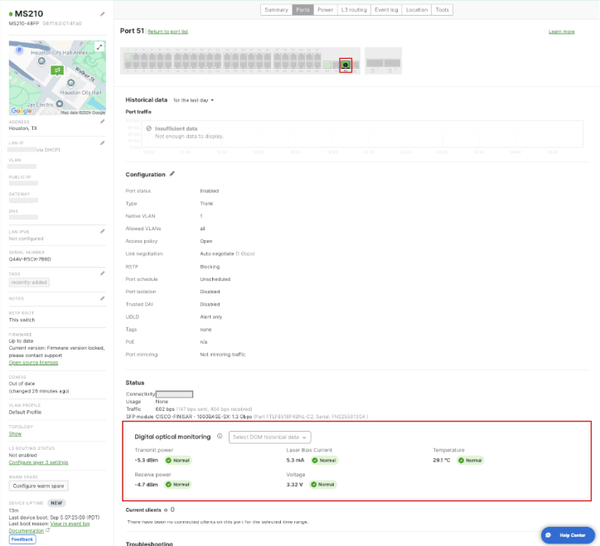

Security professionals are adopting security information and event management (Siem) tools to take a holistic approach to monitoring IT security. According to a forecast from MarketsandMarkets, the global Siem market is expected to grow 5.5% a year to reach $5.5bn by 2025. But not every organisation can justify deploying a full Siem system.
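The forecast describes compound annual growth. The article does not give the forecast’s base year, so the sketch below assumes, purely for illustration, a five-year horizon starting in 2020 and backs out the implied starting market size from the quoted end value and growth rate.

```python
def implied_start_value(end_value: float, annual_growth: float, years: int) -> float:
    """Invert compound growth: end_value = start * (1 + annual_growth) ** years."""
    return end_value / (1 + annual_growth) ** years


# $5.5bn by 2025 at 5.5% a year; the five-year horizon (from 2020) is an assumption
start = implied_start_value(5.5, 0.055, 5)
print(f"Implied starting market size: ${start:.2f}bn")  # Implied starting market size: $4.21bn
```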

Using AI to trawl masses of data for threats is not unlike the techniques used in AIOps (artificial intelligence for IT operations), where server, network and application logs are analysed. Gartner says AIOps is primarily used to support IT operations processes that monitor or observe IT infrastructure, application behaviour or digital experience.

“Almost always, AIOps platform investments have been justified on the basis of their ability to decrease mean time to problem resolution and the resultant cost reduction,” says the analyst firm.

In the company’s Market guide for AIOps platforms, Gartner analysts wrote that the benefits of AIOps include reducing event volumes and false alarms. Being overwhelmed by false positives is a major headache for IT security teams, and can lead to legitimate activities being blocked. As Howells and Kalfoglou’s iPad example shows, AI can build an understanding of what constitutes “normal user behaviour”.
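One simple way to cut event volumes is to collapse repeated alerts. The sketch below is a rule-based stand-in, not the machine learning correlation AIOps platforms perform and not Gartner’s method: it keeps the first alert for each (source, rule) pair and suppresses repeats that arrive within an assumed five-minute window. The `Alert` type and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """A raised alert (hypothetical schema for illustration)."""
    timestamp: float  # seconds since some epoch
    source: str       # e.g. host or service name
    rule: str         # detection rule that fired


def deduplicate(alerts: list[Alert], window: float = 300.0) -> list[Alert]:
    """Keep one alert per (source, rule) pair, dropping repeats within `window` seconds."""
    last_kept: dict[tuple[str, str], float] = {}
    kept: list[Alert] = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.source, alert.rule)
        previous = last_kept.get(key)
        # Keep the alert if this pair is new or its last kept alert is older than the window.
        if previous is None or alert.timestamp - previous > window:
            kept.append(alert)
            last_kept[key] = alert.timestamp
    return kept


if __name__ == "__main__":
    raw = [Alert(t, "web01", "login_failure") for t in (0, 60, 120, 400)]
    raw.append(Alert(10, "web01", "port_scan"))
    print(len(deduplicate(raw)))  # 3
```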

AIOps can additionally detect anomalous values in time-series data. This goes beyond merely “knowing” when the iPad was used to try to access shared data after 10pm, against company policy.

Instead, AI can uncover hidden patterns in the data and predict outcomes, and such predictions could alert security professionals to problems long before a data policy violation is flagged.
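Anomaly detection on time-series data can be illustrated with a rolling z-score, one of the simplest statistical techniques for the job; AIOps platforms typically use more sophisticated models, so this is only a sketch. It flags any point more than an assumed three standard deviations from the mean of the preceding window, applied here to hypothetical hourly counts of shared-drive accesses. The function name, window and threshold are illustrative assumptions.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(values: list[float], window: int = 24,
                             threshold: float = 3.0) -> list[int]:
    """Return indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` points (window must be at least 2)."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all is unusual.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical hourly shared-drive access counts, ending in a sudden spike
    hourly = [5, 6, 5, 4, 5, 6, 5, 4] * 3 + [40]
    print(rolling_zscore_anomalies(hourly))  # [24]
```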

AI can also perform root cause analysis, combining bytecode instrumentation or distributed tracing data with graph analysis, to understand why a data breach occurred.
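Graph-based root cause analysis can be sketched as follows. Assuming a service dependency graph has already been derived from distributed traces (each service mapped to the services it calls), a simple heuristic treats an anomalous service as a root-cause candidate when nothing downstream of it is also anomalous, since its symptoms cannot be explained by a failing dependency. The heuristic, function names and service names are illustrative assumptions, not a specific product’s algorithm.

```python
def downstream(calls: dict[str, set[str]], start: str) -> set[str]:
    """All services reachable from `start` by following call edges (cycle-safe)."""
    seen: set[str] = set()
    stack = list(calls.get(start, set()))
    while stack:
        service = stack.pop()
        if service not in seen:
            seen.add(service)
            stack.extend(calls.get(service, set()))
    return seen


def root_cause_candidates(calls: dict[str, set[str]], anomalous: set[str]) -> set[str]:
    """Anomalous services with no other anomalous service anywhere downstream."""
    return {
        service
        for service in anomalous
        if not ((downstream(calls, service) - {service}) & anomalous)
    }


if __name__ == "__main__":
    # Hypothetical call graph reconstructed from trace spans
    calls = {"frontend": {"auth", "orders"}, "orders": {"db"}, "auth": set()}
    print(root_cause_candidates(calls, {"frontend", "orders", "db"}))  # {'db'}
```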

However, Deloitte’s Bartoletti warns that over-reliance on AI presents another problem. “As AI improves at safeguarding assets, so too does it improve attacking them,” she says. “As cutting-edge technologies are applied to improve security, cyber criminals are using the same innovations to gain an edge over them. Typical attacks involve gathering system information or sabotaging an AI system by flooding it with requests. AI can augment cyber security so long as organisations know its limitations and have a clear strategy focusing on the present while constantly looking at the evolving threat landscape.”

As the game between IT security professionals and hackers intensifies, the battle will inevitably shift to AI-powered cyber attacks and cyber defences. The question then becomes: whose AI is faster and smarter? Who wins: the cat or the mouse?