Does technology increase the problem of racism and discrimination?

According to an article published in MIT Technology Review, technology promotes racism. Facial recognition algorithms have been shown to discriminate against Black people, and even some technical terminology is considered offensive because it reflects "white supremacy."

Technology was designed to perpetuate racism. So argues a recent article in MIT Technology Review written by Charlton McIlwain, professor of media, culture and communication at New York University and author of Black Software: The Internet & Racial Justice, From the AfroNet to Black Lives Matter.

"We've designed facial recognition technologies that target criminal suspects on the basis of skin color. We've trained automated risk profiling systems that disproportionately identify Latinx people as illegal immigrants. We've devised credit scoring algorithms that disproportionately identify black people as risks and prevent them from buying homes, getting loans, or finding jobs," McIlwain wrote.

In the article, the author traces the origins of the political use of algorithms: to win elections, to gauge the social climate, and to design psychological campaigns that could shift the public mood, which in the United States of the late 1960s was tense. These efforts, however, paved the way for large-scale surveillance of the areas with the most unrest, which at the time meant the Black community.

According to McIlwain, "this kind of information had helped create what came to be known as 'criminal justice information systems.' They proliferated through the decades, laying the foundation for racial profiling, predictive policing, and racially targeted surveillance. They left behind a legacy that includes millions of black and brown women and men incarcerated."

Race treated as a threat during the pandemic

Contact tracing and threat-mapping technologies designed to monitor and contain the COVID-19 pandemic did nothing to improve the racial climate. On the contrary, these applications showed high infection rates among Black, Latino and Indigenous people.

Although these figures could be read as evidence that members of these communities lack access to quality, timely medical care, the information was presented as if Black, Latino and Indigenous people were a national problem and a source of contagion. Donald Trump himself made comments to this effect and called for reinforcing the southern border to keep Mexicans and other Latinos from entering the country and adding to the number of COVID-19 cases, which was already quite high.

McIlwain's fear, shared by other members of the Black community in the United States, is that the new applications created in response to the pandemic will be used to identify protesters in order to later "quell the threat." Presumably, he is referring to persecution and arrests, which could end in imprisonment or even disappearances.

"If we don’t want our technology to be used to perpetuate racism, then we must make sure that we don’t conflate social problems like crime or violence or disease with black and brown people. When we do that, we risk turning those people into the problems that we deploy our technology to solve, the threat we design it to eradicate," concludes the author.

The long road ahead for algorithms

Although artificial intelligence and machine learning allow applications to improve from the data they process, the original programming is still done by one or more people. Those who create a program or application are the ones who initially define the parameters of its algorithms. Without well-defined criteria, those algorithms can overgeneralize, and this can lead to discriminatory or racist outcomes.

The British newspaper The Guardian reported a few years ago that one of Google's algorithms auto-tagged images of Black people as gorillas. Other companies, such as IBM and Amazon, have pulled back from facial recognition technology because of its bias against Black people, especially women.

"We believe now is the time to begin a national dialogue on whether and how facial recognition technology should be employed by domestic law enforcement agencies," IBM CEO Arvind Krishna wrote in a letter sent to Congress in June. "[T]he fight against racism is as urgent as ever," Krishna wrote, while announcing that IBM no longer offers "general purpose" facial recognition products and will not condone the use of any technology for mass surveillance, racial profiling or human rights violations.

According to a study by the MIT Media Lab, the error rate of IBM's software was about 34 percentage points higher for Black women than for white men. Given that gap, IBM's decision not only seems fair from a racial standpoint; it also acknowledges how much work remains before algorithms become reliably accurate.

The 2018 MIT Media Lab study found that, although the overall accuracy of these products ranged from 87.9% to 93.7%, the differences by skin color and gender were striking: 93% of the errors made by Microsoft's product involved people with darker skin, and 95% of the errors made by Face++, a Chinese alternative, involved women.
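The figures above mix two different measurements, so a minimal Python sketch may help separate them. It uses invented toy counts, not the study's data, and the subgroup labels are hypothetical. The per-group error rate (errors divided by samples in that group) underlies a comparison like the 34-point gap for IBM, while the share of all errors falling in one group is the kind of figure reported for Microsoft and Face++. The sketch also shows how a single overall accuracy number can hide a large gap between groups.

```python
from collections import Counter

# Hypothetical toy results: (subgroup, prediction_was_correct).
# These counts are invented for illustration and are NOT the MIT Media Lab data.
results = (
    [("lighter_male", True)] * 99 + [("lighter_male", False)] * 1
    + [("darker_female", True)] * 70 + [("darker_female", False)] * 30
)


def error_rates(results):
    """Error rate within each subgroup: errors / samples in that subgroup."""
    totals = Counter(group for group, _ in results)
    errors = Counter(group for group, correct in results if not correct)
    return {group: errors[group] / totals[group] for group in totals}


def error_shares(results):
    """Share of all errors that fall in each subgroup."""
    errors = Counter(group for group, correct in results if not correct)
    total_errors = sum(errors.values())
    return {group: n / total_errors for group, n in errors.items()}


# A single overall accuracy figure, as reported for each product.
overall_accuracy = sum(correct for _, correct in results) / len(results)
rates = error_rates(results)
shares = error_shares(results)
# Difference between subgroup error rates, in percentage points.
gap_points = 100 * (rates["darker_female"] - rates["lighter_male"])

print(f"Overall accuracy: {overall_accuracy:.1%}")
for group, rate in rates.items():
    print(f"{group}: error rate {rate:.1%}, share of all errors {shares[group]:.1%}")
print(f"Gap: {gap_points:.1f} percentage points")
```

With these toy counts, overall accuracy is 84.5%, yet the error rate is 1% for one group and 30% for the other, and about 97% of all errors fall on the second group.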

Joy Buolamwini, co-author of the MIT study and founder of the Algorithmic Justice League, sees IBM's initiative as a first step toward holding companies accountable and promoting fair, accountable artificial intelligence. "This is a welcome recognition that facial recognition technology, especially as deployed by police, has been used to undermine human rights, and to harm Black people specifically, as well as Indigenous people and other People of Color," she said.

Time to change IT terminology

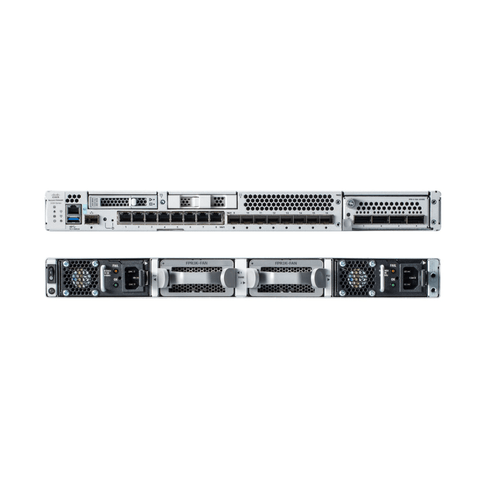

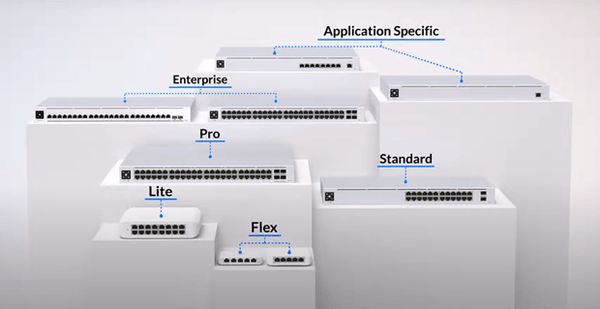

Another discrimination-related issue in the IT industry concerns the language used to name certain components of networks and system architectures. Terms like master/slave are being replaced with less objectionable alternatives, and the same is happening with blacklist/whitelist. Developers will now use terms such as leader/follower and allowlist/blocklist instead.

The open source Linux kernel will adopt new inclusive terminology in its code and documentation. Linus Torvalds, the kernel's creator and lead maintainer, approved the new terminology on July 10, according to ZDNet.

GitHub, the Microsoft-owned software development platform, also announced a few weeks ago that it is working to remove such terms from its service.

These actions show the technology industry's commitment to building tools that help society grow: inclusive systems, applications and technologies that combat discrimination rather than foment racism.